Make Money with Python – AZLyrics Scrape

Upwork again offers an opportunity to demonstrate how we can make money with Python. The job proposal is a web scraping task: extract the lyrics for every artist on AZLyrics.com.

Watch the YouTube tutorial…

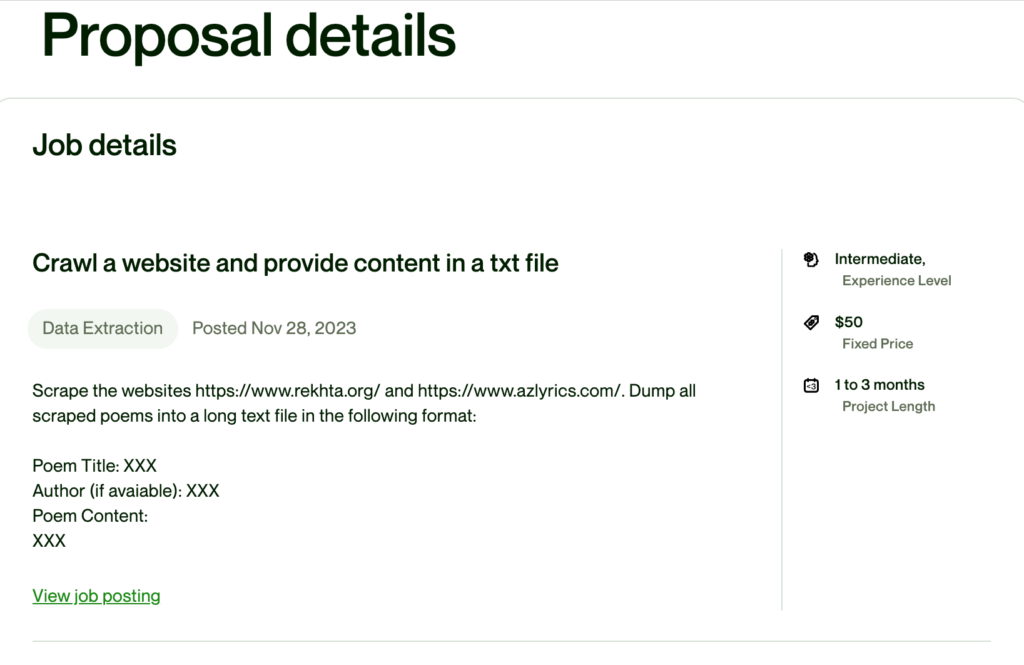

Client’s requirements

Homepage

The homepage shows the A-Z plus ‘#’ index of artists at the top of the page. Otherwise, it’s a very plain site.

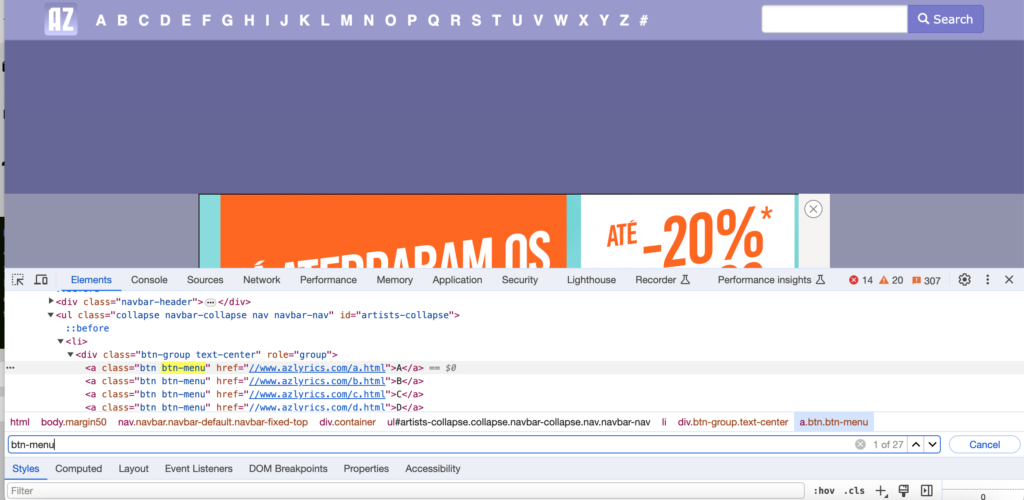

Inspect the page

Inspecting the homepage, we can see a list of links, one for each letter, indexing the artists by (mostly) their last name. They are ‘a’ tags with class ‘btn-menu’ (the full class attribute is ‘btn btn-menu’) inside a div with class ‘btn-group’. Searching for that class in the Inspect window shows 27 instances: A-Z plus ‘#’.

Extract links for letters listed in the header

So we can find the ‘letter-links’ using this class as the identifier.
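To see the class matching in isolation, here is a minimal, dependency-free sketch that swaps in the standard library’s `html.parser` for BeautifulSoup. The HTML snippet is a hypothetical stand-in for the homepage header, and `LetterLinkParser` is an illustrative name, not part of any library. Because a `class` attribute can hold several space-separated classes, the sketch splits it and checks for `btn-menu` as a single token.

```python
from html.parser import HTMLParser


class LetterLinkParser(HTMLParser):
    """Collect the hrefs of <a> tags whose class list contains 'btn-menu'."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        attributes = dict(attrs)
        # class="btn btn-menu" holds two classes, so split it into tokens
        classes = (attributes.get('class') or '').split()
        href = attributes.get('href')
        if 'btn-menu' in classes and href:
            self.hrefs.append(href)


# Hypothetical snippet shaped like the header described above
sample_html = '''
<div class="btn-group">
  <a class="btn btn-menu" href="//www.azlyrics.com/a.html">A</a>
  <a class="btn btn-menu" href="//www.azlyrics.com/b.html">B</a>
  <a class="nav-link" href="/about.html">About</a>
</div>
'''

parser = LetterLinkParser()
parser.feed(sample_html)
print(parser.hrefs)  # ['//www.azlyrics.com/a.html', '//www.azlyrics.com/b.html']
```

In BeautifulSoup itself, `find_all('a', class_='btn-menu')` or `soup.select('a.btn-menu')` performs the same token-based match, whereas passing the full string `'btn btn-menu'` only matches tags whose class attribute is exactly that string.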

To start this code, we’ll need two libraries: requests and BeautifulSoup.

We’ll need a user-agent for this site, which we can get by searching Google for ‘my user agent’. Mine is Chrome on macOS; like every modern browser’s user-agent string, it begins with ‘Mozilla/5.0’.
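The name of the header key matters: servers look for the standard `User-Agent` header (header names are case-insensitive, but `user_agent` with an underscore is a different header). This sketch swaps in the standard library’s `urllib.request.Request`, rather than requests, so it runs without third-party packages and without sending anything over the network.

```python
from urllib.request import Request

user_agent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'

# Correct key: urllib stores it as 'User-agent' (it applies str.capitalize)
good = Request('https://www.azlyrics.com/', headers={'User-Agent': user_agent})
# Mistyped key: stored as 'User_agent', a separate non-standard header
bad = Request('https://www.azlyrics.com/', headers={'user_agent': user_agent})

print(good.get_header('User-agent') == user_agent)  # True
print(bad.get_header('User-agent'))                 # None
print(bad.get_header('User_agent') == user_agent)   # True
```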

Let’s start some code.

```python
import requests
from bs4 import BeautifulSoup as bs4

user_agent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'
# The HTTP header is named 'User-Agent'; a key such as 'user_agent' would be sent
# as a separate header and requests' default user-agent would still be used
headers = {'User-Agent': user_agent}

url = 'https://www.azlyrics.com/'

response = requests.get(url, headers=headers)
soup = bs4(response.text, 'html.parser')
```

This soup lets us extract the letter_hrefs with BeautifulSoup. I have limited the list to 2 entries so we do not overload the server. The scraped hrefs are protocol-relative: they start with two forward slashes (for example ‘//www.azlyrics.com/a.html’), so we prepend the string ‘https:’ to make each link a complete URL before appending it to the letter_hrefs list.
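Prepending ‘https:’ works because these hrefs are protocol-relative. An alternative is the standard library’s `urllib.parse.urljoin`, which resolves a link against the URL of the page it was scraped from and also copes with relative, root-relative and already-absolute links, so one call covers every case. The hrefs below are samples shaped like the links in this tutorial, not values fetched from the site.

```python
from urllib.parse import urljoin

base = 'https://www.azlyrics.com/'

samples = [
    '//www.azlyrics.com/a.html',        # protocol-relative (letter links)
    'a/a1.html',                        # relative path (artist links)
    '/lyrics/a1/foreverinlove.html',    # root-relative path (song links)
    'https://www.azlyrics.com/a.html',  # already absolute, returned unchanged
]

for href in samples:
    print(urljoin(base, href))
# https://www.azlyrics.com/a.html
# https://www.azlyrics.com/a/a1.html
# https://www.azlyrics.com/lyrics/a1/foreverinlove.html
# https://www.azlyrics.com/a.html
```

One caveat: relative paths like ‘a/a1.html’ are resolved against the base you pass in, so the base should be the URL of the page the link came from.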

```python
# get list of hrefs for artist letters
letter_hrefs = []
letters = soup.find_all('a', {'class': 'btn btn-menu'})
for letter in letters[:2]:  # 2 entries only
    href = letter.get('href')
    letter_hrefs.append('https:' + href)  # hrefs start with '//'

letter_hrefs  # list output
```

['https://www.azlyrics.com/a.html', 'https://www.azlyrics.com/b.html']

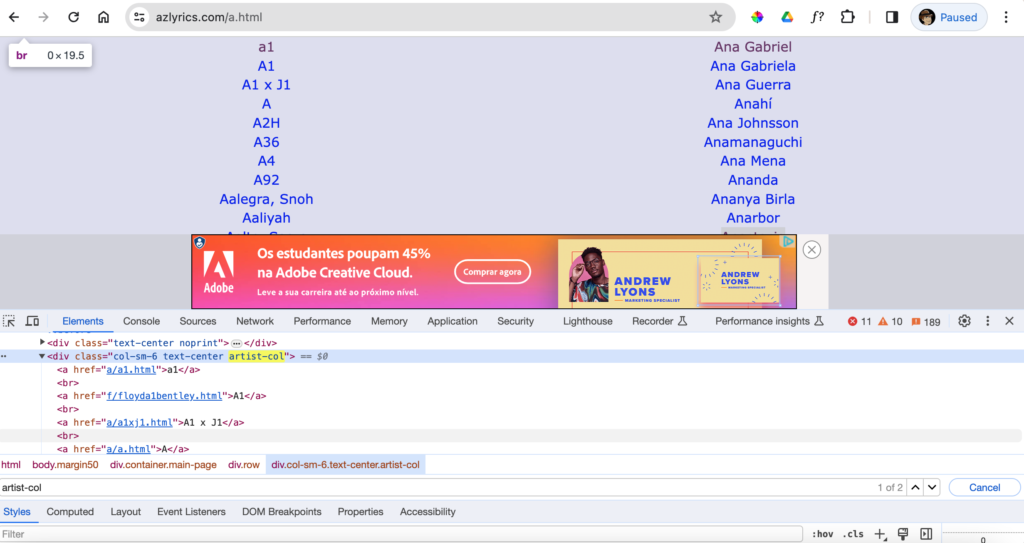

Extract the artists

Next, we visit each of these links in turn and request the list of artists for each letter. Again, we limit ourselves to 2 links. Inspecting the page, we can see that the artists are listed as hrefs in the ‘a’ tags inside divs with class ‘artist-col’. These hrefs are relative, so we prepend the base URL to make each artist link complete.
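Once the `[:2]` limits come off, the scraper will make thousands of requests, so it is worth pausing between them. The sketch below is a hypothetical helper, `throttled`, that wraps any fetch function and sleeps between calls; the one-second default is an arbitrary assumption, not a limit documented by AZLyrics. The fetch function is passed in so the example runs offline.

```python
import time
from itertools import islice
from typing import Callable, Iterable, Iterator, Optional, Tuple


def throttled(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    delay: float = 1.0,
    limit: Optional[int] = None,
) -> Iterator[Tuple[str, str]]:
    """Yield (url, page_text) pairs, sleeping `delay` seconds between requests.

    `limit` plays the role of the [:2] slices used while testing (None = all).
    """
    for i, url in enumerate(islice(urls, limit)):
        if i:  # no need to wait before the very first request
            time.sleep(delay)
        yield url, fetch(url)


# Stand-in fetch function so the example needs no network access.
# With requests you might pass: lambda u: requests.get(u, headers=headers).text
def fake_fetch(url: str) -> str:
    return f'<html>{url}</html>'


pages = list(throttled(
    ['https://example.com/a', 'https://example.com/b', 'https://example.com/c'],
    fake_fetch, delay=0.1, limit=2,
))
print([url for url, _ in pages])  # ['https://example.com/a', 'https://example.com/b']
```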

```python
all_artists = []
for href in letter_hrefs[:2]:
    response = requests.get(href, headers=headers)
    artist_soup = bs4(response.text, 'html.parser')
    artists_soup_list = artist_soup.find_all('div', {'class': 'artist-col'})
    for artist in artists_soup_list:
        artist_atags = artist.find_all('a')
        for atag in artist_atags:
            # hrefs are relative, so prepend the base URL
            all_artists.append(url + atag.get('href'))

all_artists[:5]  # show the first five links
```

['https://www.azlyrics.com/a/a1.html',
 'https://www.azlyrics.com/f/floyda1bentley.html',
 'https://www.azlyrics.com/a/a1xj1.html',
 'https://www.azlyrics.com/a/a.html',
 'https://www.azlyrics.com/a/a2h.html']

Extract the songs for each artist

Next we need to request the page of the artist, convert it to ‘soup’ and extract the list of songs for each artist.

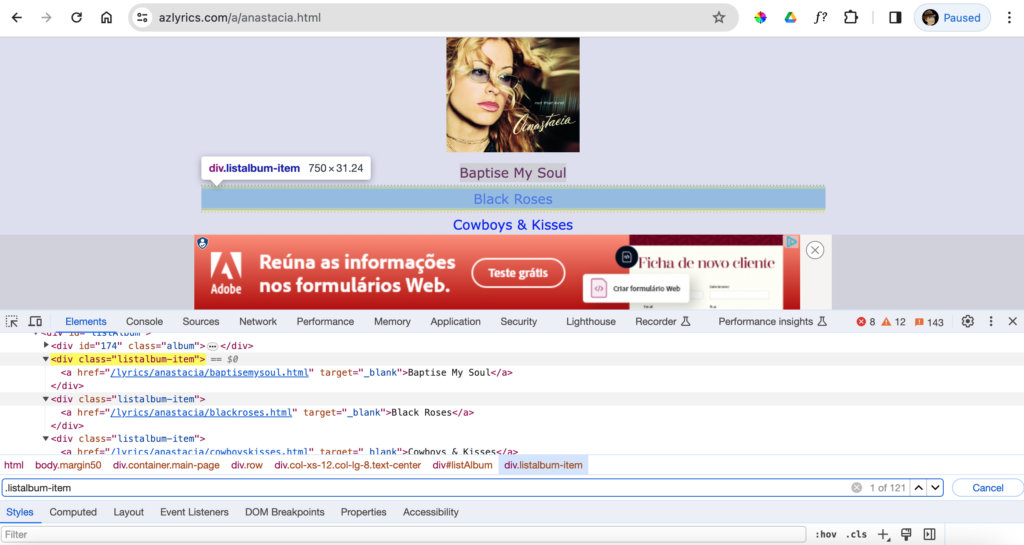

Let’s inspect the page and see what we need to extract. The songs are contained in divs with class ‘listalbum-item’. Again, we extract each ‘href’, add the base URL and append the result to the list.

Some of the song links do not follow the standard pattern and already contain the full URL. An ‘if’ statement checks each href: full URLs are appended to the song list unchanged, and relative ones get the base URL prepended.
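The key detail is that the check must inspect the href string itself. A minimal sketch of that decision as a hypothetical helper, `song_url`, assuming relative song links are root-relative (they start with ‘/’, as the earlier output suggests):

```python
def song_url(href: str, base_url: str = 'https://www.azlyrics.com/') -> str:
    """Return a full song URL from an href scraped off an artist page."""
    if href.startswith(('http://', 'https://')):
        return href  # already a full URL: keep it unchanged
    # relative link: drop leading '/' so the base URL's '/' is not doubled
    return base_url + href.lstrip('/')


print(song_url('/lyrics/a1/foreverinlove.html'))
# https://www.azlyrics.com/lyrics/a1/foreverinlove.html
print(song_url('https://www.example.com/song.html'))
# https://www.example.com/song.html
```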

```python
songs = []
for artist in all_artists[:2]:
    response = requests.get(artist, headers=headers)
    song_soup = bs4(response.text, 'html.parser')
    song_soup_list = song_soup.find_all('div', {'class': 'listalbum-item'})
    for song in song_soup_list:
        for atag in song.find_all('a'):
            href = atag.get('href')
            if not href:
                continue  # skip anchors without a link
            # test the href string; `'https' in atag` would search the tag's children
            if not href.startswith('http'):
                songs.append(url + href.lstrip('/'))  # relative: add the base URL
            else:
                songs.append(href)  # already a full URL: keep it unchanged

songs[:5]  # let's look at 5 examples
```

['https://www.azlyrics.com/lyrics/a1/foreverinlove.html',
 'https://www.azlyrics.com/lyrics/a1/bethefirsttobelieve.html',
 'https://www.azlyrics.com/lyrics/a1/summertimeofourlives.html',
 'https://www.azlyrics.com/lyrics/a1/readyornot.html',
 'https://www.azlyrics.com/lyrics/a1/everytime.html']

Extracting the song’s Title, Artist and Lyrics

We now have a list of song URLs (only two artists’ worth while we are testing), from which we can extract the Title, Artist and Lyrics.

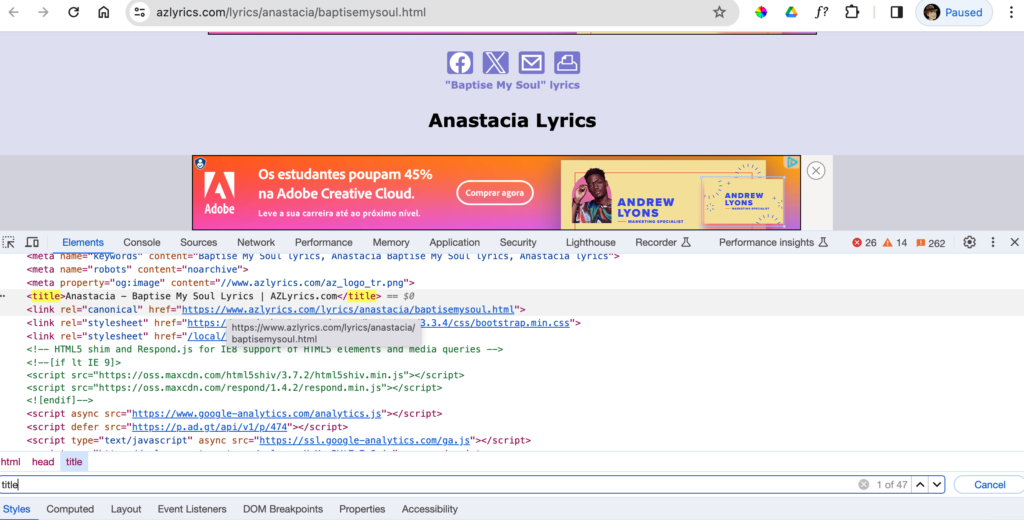

Inspecting a song page, we can see that the ‘title’ tag in the page’s ‘head’ contains both the artist name and the song title, so we can extract this information with BeautifulSoup.

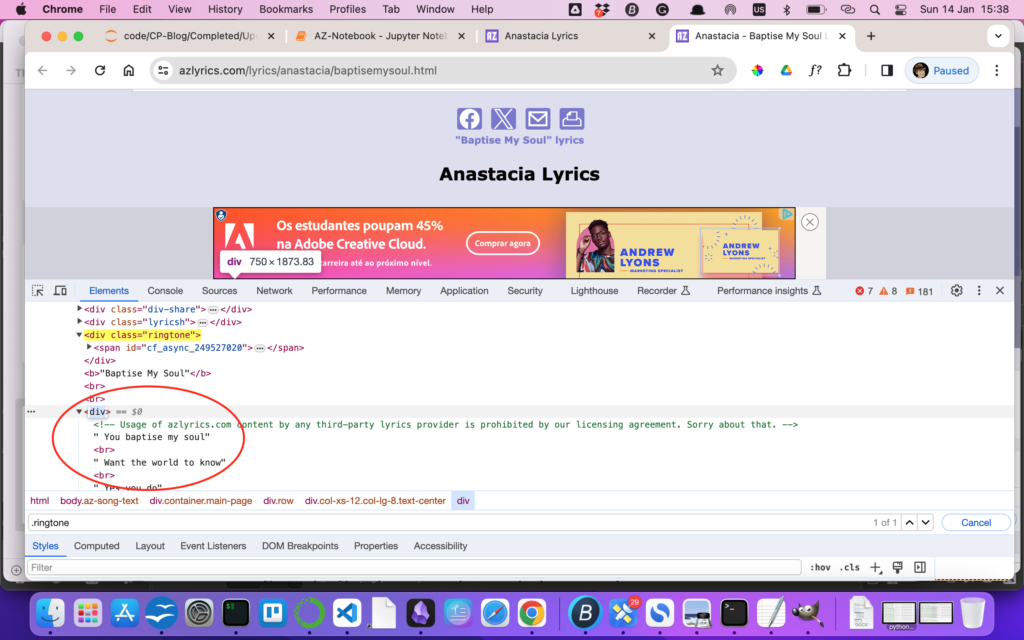

Further down the page, the lyrics sit inside a div with no class or id. This is only a minor problem: we can locate the div just before it, whose class is ‘ringtone’, and select its next sibling div to extract the lyrics.
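The text pulled from that sibling div keeps the page’s layout whitespace; the printed tuple later in this post starts with ‘\n\r\n’. If you want tidier output, a small post-processing step helps. This is a hypothetical helper, `clean_lyrics`, shown on made-up sample text; it normalises line endings, trims trailing spaces and drops blank lines at the start and end while keeping the blank lines between verses.

```python
def clean_lyrics(raw: str) -> str:
    """Normalise line endings, trim trailing spaces, drop leading/trailing blank lines."""
    text = raw.replace('\r\n', '\n').replace('\r', '\n')
    lines = [line.rstrip() for line in text.split('\n')]
    return '\n'.join(lines).strip('\n')


sample = '\n\r\nFirst line\nSecond line \n\nThird line\n'
print(repr(clean_lyrics(sample)))  # 'First line\nSecond line\n\nThird line'
```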

Build a tuple for the Song, Artist and Lyrics and add to a list of Results

We will now extract the key details for every song ready for writing to a text file.

First, we get the text of the ‘title’ tag from the ‘head’ of the HTML structure.

Then, we need to manipulate this string.

We split the text string on the ‘|’ and take element ‘[0]’ of this list to manipulate further.

That substring is then split on the ‘-’, with the artist being the first element.

We then remove the word ‘Lyrics’ from the second element (replacing it with nothing) and strip the surrounding whitespace to get the song name.

Finally, we create a tuple of these 3 variables, which will be added to a list in the final script.

Here we just print the tuple to demonstrate that this section of code works.
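Splitting on every ‘-’ breaks for artists or songs with a hyphen in their name. The sketch below is a hypothetical `parse_title` helper that assumes the title layout implied by the steps above (‘Artist - Song Lyrics | …’) and splits only on the first spaced hyphen; it also removes ‘Lyrics’ only as the trailing word rather than anywhere in the song name. Note it returns (artist, song). The example titles are made up.

```python
def parse_title(title_text: str) -> tuple[str, str]:
    """Split a title like 'Artist - Song Lyrics | Site' into (artist, song)."""
    head = title_text.split('|', 1)[0]         # keep the part before '|'
    artist, sep, song = head.partition(' - ')  # split on the first ' - ' only
    if not sep:
        raise ValueError(f'unexpected title format: {title_text!r}')
    song = song.strip()
    if song.endswith('Lyrics'):                # drop only the trailing word
        song = song[: -len('Lyrics')].rstrip()
    return artist.strip(), song


print(parse_title('A1 - Forever In Love Lyrics | AZLyrics.com'))
# ('A1', 'Forever In Love')
print(parse_title('Band-Name - Song Title Lyrics | AZLyrics.com'))
# ('Band-Name', 'Song Title')
```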

```python
for song_url in songs:
    response = requests.get(song_url, headers=headers)
    song_soup = bs4(response.text, 'html.parser')

    # title first
    title = song_soup.find('title').text
    title = title.split('|')
    title = title[0].split('-')
    artist = title[0]
    song = title[1].replace('Lyrics', '').strip()

    # Extract the lyrics: the unnamed div right after the 'ringtone' div
    ringtone = song_soup.find('div', {'class': 'ringtone'})
    lyric_tag = ringtone.find_next_sibling('div')
    lyrics = lyric_tag.text

    song_tuple = (song, artist, lyrics)
    print(song_tuple)
    break  # demonstrate with the first song only
```

('Forever In Love', 'a1 ', "\n\r\nLove leads to laughter\nLove leads to pain\n…")

Refactor for a single script in modular form

Finally, we refactor these sections of code into a single script.

```python
# AZLyrics Scraper
# Charming Python
# 14 Jan 24

import requests
from bs4 import BeautifulSoup as bs4


def get_response(url, headers):
    response = requests.get(url, headers=headers, timeout=10)
    return response


def get_artists(response):
    """Return the relative artist hrefs listed on a letter page."""
    artists = []
    artist_soup = bs4(response.text, 'html.parser')
    artists_soup_list = artist_soup.find_all('div', {'class': 'artist-col'})
    for artist in artists_soup_list:
        for atag in artist.find_all('a'):
            artists.append(atag.get('href'))
    return artists


def get_songs(response, base_url):
    """Return full song URLs listed on an artist page."""
    songs = []
    song_soup = bs4(response.text, 'html.parser')
    song_soup_list = song_soup.find_all('div', {'class': 'listalbum-item'})
    for song in song_soup_list:
        for atag in song.find_all('a'):
            href = atag.get('href')
            if not href:
                continue  # skip anchors without a link
            if href.startswith('http'):
                songs.append(href)  # already a full URL
            else:
                songs.append(base_url + href.lstrip('/'))  # relative link
    return songs


def get_lyrics(response):
    """Return a (title, artist, lyrics) tuple for one song page."""
    song_soup = bs4(response.text, 'html.parser')

    title = song_soup.find('title').text
    title = title.split('|')
    title = title[0].split('-')
    artist = title[0]
    song = title[1].replace('Lyrics', '').strip()

    # Extract the lyrics: the unnamed div right after the 'ringtone' div
    ringtone = song_soup.find('div', {'class': 'ringtone'})
    lyrics = ringtone.find_next_sibling('div').text

    return (song, artist, lyrics)


def main():
    user_agent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'
    headers = {'User-Agent': user_agent}
    url = 'https://www.azlyrics.com/'

    # first soup
    response = get_response(url, headers)
    soup = bs4(response.text, 'html.parser')

    # get list of hrefs for artist letters
    letter_hrefs = []
    letters = soup.find_all('a', {'class': 'btn btn-menu'})
    for letter in letters[:2]:  # limit the list - testing purposes
        href = letter.get('href')
        letter_hrefs.append('https:' + href)  # hrefs start with '//'

    # get the artists list
    all_artists = []
    for href in letter_hrefs[:2]:  # limit the list - testing purposes
        response = get_response(href, headers)
        all_artists += get_artists(response)

    all_songs = []
    for artist in all_artists[:2]:  # limit the list - testing purposes
        artist_url = url + artist  # complete the partial URL
        response = get_response(artist_url, headers)
        all_songs += get_songs(response, url)

    all_song_tuples = []
    for song_url in all_songs[:2]:  # limit the list - testing purposes
        response = get_response(song_url, headers)
        all_song_tuples.append(get_lyrics(response))

    # write to file (mode 'w' overwrites any existing songs.txt)
    with open('songs.txt', 'w', encoding='utf-8') as f:
        for title, artist, content in all_song_tuples:
            f.write(f'Song Title: {title}\n')
            f.write(f'Artist : {artist}\n')
            f.write('Lyrics:\n')
            f.write(content)
            f.write('\n')
            f.write('------------------------\n')
            f.write('\n')


if __name__ == '__main__':
    main()
```
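Lyrics often contain accented characters, and `open()` without an explicit encoding uses a platform-dependent default, which can raise `UnicodeEncodeError` on some systems (for example Windows with a legacy code page). A minimal sketch of the file-writing step on its own, as a hypothetical `write_songs` helper, checked offline with made-up records and a temporary directory:

```python
import os
import tempfile
from typing import Iterable, Tuple


def write_songs(records: Iterable[Tuple[str, str, str]], path: str) -> None:
    """Write (title, artist, lyrics) tuples to a UTF-8 text file, one block per song."""
    with open(path, 'w', encoding='utf-8') as f:
        for title, artist, lyrics in records:
            f.write(f'Song Title: {title}\n')
            f.write(f'Artist: {artist}\n')
            f.write('Lyrics:\n')
            f.write(lyrics)
            f.write('\n------------------------\n\n')


records = [
    ('Song One', 'Some Artist', 'Line one\nLine two'),
    ('Café Song', 'Señor Band', 'Mañana'),
]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'songs.txt')
    write_songs(records, path)
    with open(path, encoding='utf-8') as f:
        written = f.read()
print(written)
```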