Upwork Real Estate Scraping Task

One of the freelancing sites I like to use for both work and inspiration is Upwork.

I got the idea of using Upwork as a source of practice inspiration from Chris Mayer at the Finxter Academy and from the YouTube Channel ‘Make Data Useful.

A simple request came into my Upwork feed that disappeared almost as quickly as I made a proposal. Don’t you hate it when that happens?

In essence, the task had 2 requirements:

- Built a bot that could scrape the information on this specific page: https://mynest.ie/priceregister/Dublin

- Export the data to an Excel file.

A swift review of the page revealed that it was a relatively simple task.

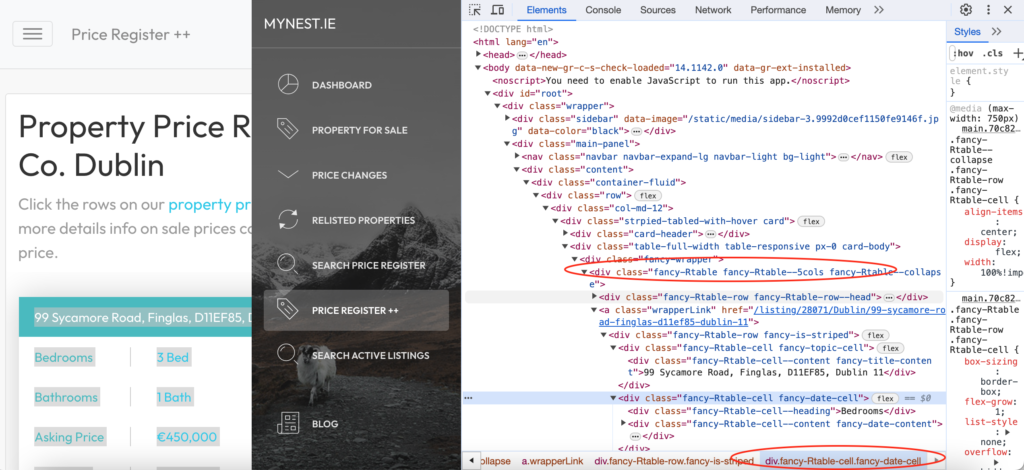

From the Chrome browser, I inspected the page.

Here we can see the data is captured in the elements tab as table data, which would be easy to scrape using Beautiful Soup….But

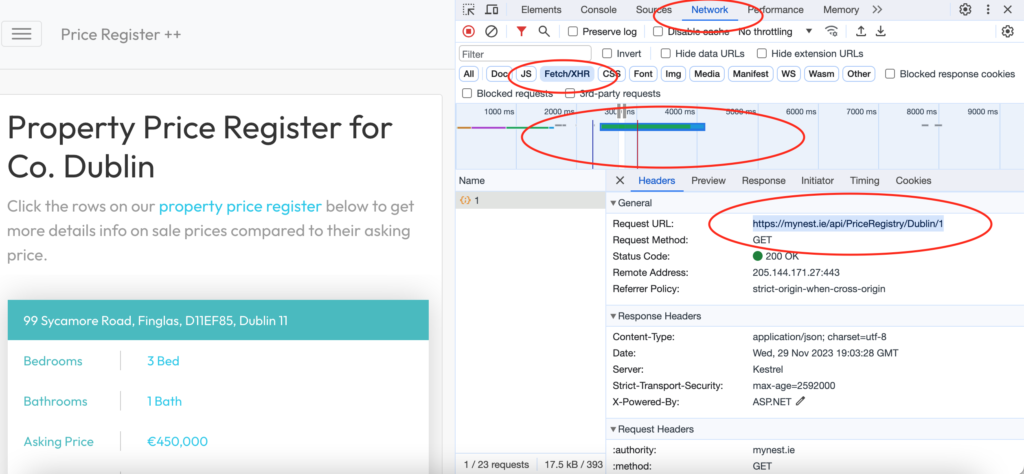

By reviewing the Network Tab and the Fetch/XHR tab and refreshing the browser, it became clear that there was an API call in the clear being made.

This may give us all the data we require so it was worth investigating further.

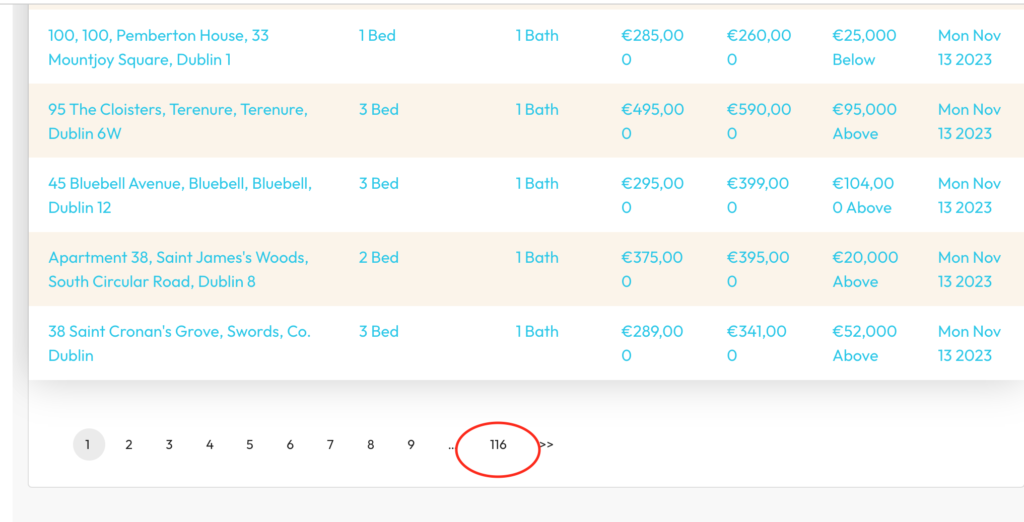

Looking at the webpage it looks like there may be 116 pages that we can scrape using the API.

So let’s see if we can see the API data…

When I’m experimenting I tend to use a Jupyter notebook as the data is retained in the notebook to allow some additional manipulation.

We are making a request so we need the requests library and we will be working with tabular data so I prefer pandas for this (provided the dataset is small).

import requests

import pandas as pd

Then let’s grab the API data for page one and have a look.

url = 'https://mynest.ie/api/PriceRegistry/Dublin/1'

response = requests.get(url, 'lxml')

response

<Response [200]>Code <Response [200]> means it’s a good request and we have a response.

So let’s look at the data. It’s an API call so the response is likely to be a json dataset.

API_data = response.json()

API_data

# The top few lines of the response are:

{'totalRecords': 9207,

'pageNumber': 1,

'records': [{'id': 28071,

'title': '99 Sycamore Road, Finglas, D11EF85, Dublin 11',

'salePrice': 480000,

'askingPrice': 450000,

'beds': '3 Bed',

'baths': '1 Bath',

'dateOfSale': '2023-11-17T00:00:00',

'difference': 30000,

'uppyDowny': 'Above'},

{'id': 71967,

'title': '8 St Davids Terrace, Off Blackhorse Avenue, Dublin 7',

'salePrice': 630000,

'askingPrice': 600000,

'beds': '3 Bed',

'baths': '1 Bath',

'dateOfSale': '2023-11-17T00:00:00',

'difference': 30000,

'uppyDowny': 'Above'},

{'id': 74048,

........

This data shows that there are 9207 records to collect, we are indeed on page number 1 and the individual records are held in a list.

The list has the dictionary key of ‘records’ so we can grab that key, value pair and see if we have the full data set.

# top few lines only

[{'id': 28071,

'title': '99 Sycamore Road, Finglas, D11EF85, Dublin 11',

'salePrice': 480000,

'askingPrice': 450000,

'beds': '3 Bed',

'baths': '1 Bath',

'dateOfSale': '2023-11-17T00:00:00',

'difference': 30000,

'uppyDowny': 'Above'},

{'id': 71967,

'title': '8 St Davids Terrace, Off Blackhorse Avenue, Dublin 7',

'salePrice': 630000,

'askingPrice': 600000,

'beds': '3 Bed',

'baths': '1 Bath',

'dateOfSale': '2023-11-17T00:00:00',

'difference': 30000,

'uppyDowny': 'Above'},

{'id': 74048,

........

and the length of this list is:

record_list = API_data['records']

record_list

8080 records on 116 pages -> 116 x 80 = 9280

With 9207 records, the last page will only have 7 records in the table…’I did check :)’

Now we just need to step through each page to get the data and extract the data, placing it in a pandas DataFrame.

import requests

import pandas as pd

# set the empty output DataFrame outside of the loops

output = pd.DataFrame()

#loop through the pages

# This should be a range of up to 117 to ensure we get pages 1 - 116

# I stopped at 3 because this was just a proof of concept

for i in range (1,3):

# the variable i is inserted into the URL as an f-String

url = f'https://mynest.ie/api/PriceRegistry/Dublin/{i}'

response = requests.get(url, 'lxml')

API_data = response.json()

record_list = API_data['records']

# step through each record and store in a DataFrame

for record in record_list:

df_dictionary = pd.DataFrame([record])

# Concatenate the output DataFrame

# with the new record DataFrame

# List is like appending an element in a list but for

# DataFrames

output = pd.concat([output, df_dictionary], ignore_index=True)

print(len(output))

160

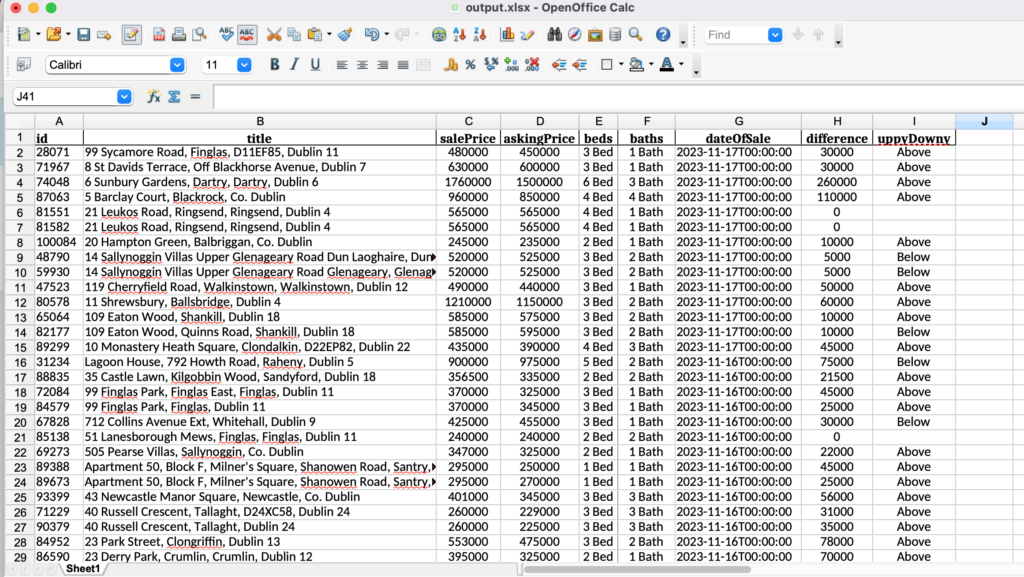

# 160 = 2 pages worth of dataAll we now need to do is to save the data to an Excel document and send it the the client!

The full, if somewhat underwhelming, code is here with the results.

import requests

import pandas as pd

output = pd.DataFrame()

for i in range (1,3):

url = f'https://mynest.ie/api/PriceRegistry/Dublin/{i}'

response = requests.get(url, 'lxml')

API_data = response.json()

record_list = API_data['records']

for record in record_list:

df_dictionary = pd.DataFrame([record])

output = pd.concat([output, df_dictionary], ignore_index=True)

output.to_excel("output.xlsx", index=False)And the contents of the Excel file are:

RESULT!

And if this had been a paid task, I’d have provided the code, the xlsx file and guidance on how to run the code so the client could have regular updates.

Charm that Python and under promise, over deliver!