Make Money with Python – Website Keyword Hunt

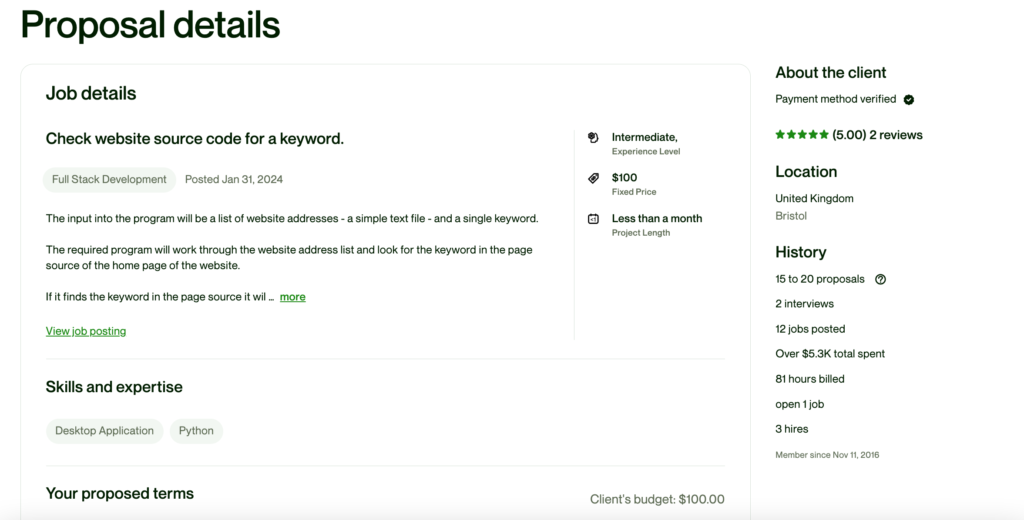

Upwork again offers an opportunity to demonstrate how we can make money with Python. This time a client wants to check a list of websites read from a text file and, if a keyword is present on a site, add that site to a new text file.

Watch the YouTube tutorial… Link to follow

Client’s requirements

Plan

As we have little information to work with, let’s make some assumptions:

- The text file’s first line is the keyword

- The word only needs to appear in the body of the page for us to record that site as a positive.

- The list of web pages offered up might contain some errors that we need to deal with.

- The person using the script is skilled enough to edit a text file and run a Python script.
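As a minimal sketch of how the first and third assumptions might translate into code, the helper below splits raw lines into a keyword and a list of sites, skipping any blank lines along the way. The name parse_scan_lines and the sample lines are hypothetical, used only for illustration; the script built in this post reads the file directly instead.

```python
def parse_scan_lines(lines):
    """Split raw lines into (keyword, sites), ignoring blank lines."""
    # Strip whitespace and drop lines that are empty after stripping
    cleaned = [line.strip() for line in lines if line.strip()]
    if not cleaned:
        raise ValueError("expected a keyword on the first line")
    # Assumption from the plan: the first line is the keyword
    return cleaned[0], cleaned[1:]


# Hypothetical sample, shaped like the lines of the input file
sample = ["trenches\n", "\n", "https://www.upwork.com\n", "https://www.reddit.com\n"]
keyword, sites = parse_scan_lines(sample)
print(keyword)
print(sites)
```

Raising an error on an empty file is a design choice for this sketch: it fails loudly rather than searching for an empty keyword.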

Input Text File

Let’s create a simple text file with the keyword we’re searching for as the first line and then the websites on the remaining lines until the end of the file.

Let’s call this file scan.txt

    trenches
    https://www.upwork.com
    https://www.reddit.com
    https://www.theworldwar.org/learn/about-wwi/trench-warfare
    https://en.wikipedia.org/wiki/Trench_warfare
    https://www.no-such-site.com
    https://www.google.com
    https://www.indeed.com

Let’s write some code

We’ll need three libraries: requests and BeautifulSoup (installed from PyPI as requests and beautifulsoup4), plus re from the standard library, since we are searching for keywords and can use regular expressions for that.

In a new file scanner.py add this code.

    import requests
    from bs4 import BeautifulSoup as bs4
    import re

Then we’ll need to add code to load the lines from the file into a list we’ll call sites. We can use a context manager to do this.

    with open('scan.txt', 'r') as f:
        sites = []
        # Iterate over the lines of the file
        for line in f:
            # Remove the newline character at the end of the line
            site = line.strip()
            # Append the line to the list
            sites.append(site)

User-agent

Some of the websites might need us to send a User-Agent as part of the request, so we’ll add that to the headers variable.

    user_agent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'
    headers = {'user-agent': user_agent}

Keyword

We assume the first entry in the text file is the keyword. So element zero of the sites list is the keyword, and the entries from index one onwards are the websites; let’s update the list to reflect that. We’ll also create a new list, in_scan, to hold the websites that contain the keyword.

    keyword = sites[0]
    sites = sites[1:]
    in_scan = []

We’ll step through the sites and, for each one, make a request and then create some soup from the result.

    response = requests.get(site, headers=headers)
    soup = bs4(response.text, 'html.parser')

We need to create a regular expression pattern. We want any number of any character until our keyword and then any number of any character after that keyword.

This gives us a regular expression pattern of ‘.*{keyword}.*’, with the keyword substituted in.

So we need a Python statement of:

    results = soup.body.find_all(string=re.compile('.*{0}.*'.format(keyword)), recursive=True)

If the keyword appears anywhere in soup.body, results will be a non-empty list; otherwise find_all returns an empty list, which is falsy. We can use results in an ‘if’ statement and append the website to the in_scan list, ready to be saved to a text file later.
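To see how the pattern behaves on its own, here is a small sketch using only the re module on a few made-up strings. Beautiful Soup's documentation describes regular-expression filters as being applied with search(), which already looks anywhere in the string, so the leading and trailing ‘.*’ should not change which strings match. The helper build_pattern and its ignore_case option are hypothetical additions, not part of the original script; they show how re.escape might guard against keywords containing special characters and how capitalised matches such as ‘Trenches’ could be caught.

```python
import re


def build_pattern(keyword, ignore_case=False):
    """Compile a pattern matching the literal keyword anywhere in a string."""
    flags = re.IGNORECASE if ignore_case else 0
    # re.escape treats characters such as '+' or '.' in the keyword literally
    return re.compile(re.escape(keyword), flags)


# Made-up strings standing in for text nodes found in a page body
strings = [
    "Life in the trenches was grim.",
    "Trenches ran for miles.",
    "No match here.",
]

wrapped = re.compile('.*{0}.*'.format('trenches'))  # pattern used in the script
plain = build_pattern('trenches')                   # same keyword, no wildcards
loose = build_pattern('trenches', ignore_case=True)

wrapped_hits = [s for s in strings if wrapped.search(s)]
plain_hits = [s for s in strings if plain.search(s)]
loose_hits = [s for s in strings if loose.search(s)]

print(wrapped_hits == plain_hits)
print(len(loose_hits))
```

Whether the client wants a case-sensitive match is not stated in the requirements, so the script keeps the stricter behaviour.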

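We also noted in the third point of our plan that the websites in the text file may be poorly formatted or simply not exist, so we wrap the request in a ‘try/except’ block. If the request succeeds, we proceed with the keyword check. If it raises an exception (for example, an invalid URL or a host that can’t be reached), we just ‘pass’ and move on to the next site. Note that requests.get does not raise an exception for an HTTP error status such as 404 on its own; the returned error page is simply searched like any other.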

    for site in sites:
        try:
            response = requests.get(site, headers=headers)
            soup = bs4(response.text, 'html.parser')
            results = soup.body.find_all(string=re.compile('.*{0}.*'.format(keyword)), recursive=True)
            if results:
                in_scan.append(site)
        except Exception:
            # Skip sites that cannot be fetched or parsed
            pass

Write the in_scan List to a File

After the loop has completed, the in_scan list holds the websites that contain the keyword. We just need to write those to a text file, with a ‘\n’ after each address. Again we can use a context manager for this and save the results in scanned.txt. We only need to do this if the in_scan list has some contents.

    if in_scan:
        with open('scanned.txt', 'w') as f:
            # Write each matching site on its own line
            for line in in_scan:
                f.write(line)
                f.write('\n')

And that offers a working script and a quick $100.

The full code is here:

    import requests
    from bs4 import BeautifulSoup as bs4
    import re

    with open('scan.txt', 'r') as f:
        sites = []
        # Iterate over the lines of the file
        for line in f:
            # Remove the newline character at the end of the line
            site = line.strip()
            # Append the line to the list
            sites.append(site)

    user_agent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'
    headers = {'user-agent': user_agent}

    keyword = sites[0]
    sites = sites[1:]
    in_scan = []

    for site in sites:
        try:
            response = requests.get(site, headers=headers)
            soup = bs4(response.text, 'html.parser')
            results = soup.body.find_all(string=re.compile('.*{0}.*'.format(keyword)), recursive=True)
            if results:
                in_scan.append(site)
        except Exception:
            # Skip sites that cannot be fetched or parsed
            pass

    if in_scan:
        with open('scanned.txt', 'w') as f:
            # Write each matching site on its own line
            for line in in_scan:
                f.write(line)
                f.write('\n')
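The script deals with errors in the list by catching exceptions at request time. As a complementary sketch, malformed entries could also be filtered out before any request is made, using urllib.parse from the standard library. The helper name looks_like_web_url and the sample entries are hypothetical and not part of the original script. The check only looks at the shape of the address, so a well-formed URL for a site that doesn’t exist would still pass and be left to the ‘try/except’ block.

```python
from urllib.parse import urlparse


def looks_like_web_url(text):
    """Return True if text has an http(s) scheme and a host part."""
    try:
        parts = urlparse(text.strip())
    except ValueError:
        # urlparse can reject some malformed inputs, e.g. a broken IPv6 host
        return False
    return parts.scheme in ("http", "https") and bool(parts.netloc)


# Hypothetical entries, some deliberately malformed
candidates = [
    "https://www.upwork.com",
    "www.google.com",        # no scheme
    "htp://www.reddit.com",  # misspelled scheme
    "",                      # blank line
]
valid = [c for c in candidates if looks_like_web_url(c)]
print(valid)
```

Filtering this way would also make it possible to report which lines were skipped, rather than silently passing over them.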